Partnering with AI to Analyse Qualitative Data

When you can code qualitative data at speed, the potential for new insights grows dramatically. Data expert Mary-Ellen Gordon has put AI and chatbots to the test: can they handle the nuances of qualitative analysis?

Many researchers, social scientists, policy analysts, marketers and others have a love-hate relationship with qualitative data. Answers to open-ended survey questions, submissions to policy consultations, social media posts, and other forms of text-based data can offer rich insights into the challenges and opportunities facing organisations, yet extracting those insights has traditionally been a big pain.

The gold standard for analysing that type of data is to have three humans code each observation and then discuss and resolve differences. Even for an academic paper with a relatively small sample size that process is frustratingly time-consuming. At commercial scale, it’s completely unviable. Consequently, more often than many people probably want to admit, that type of data has gone un-analysed or at least under-analysed.

Over the past decade or two various software solutions have been developed to try to address this problem. Few of them were well integrated with the other types of software people use routinely, and getting even okay results tended to require a big investment in training of the human user, the software, or both. Modern AI has changed all of that. With whatever chatbot you already use you can code qualitative data at scale.

I tested this out with survey data I collected from people who graduated from Victoria University of Wellington (VUW) between 2018 and 2022. They were surveyed at the start of the year following their graduation and were asked about a variety of things, including: ‘What one thing do you most hope changes about New Zealand by 2040?’ I have 2172 answers to that question, some of which are quite lengthy.

Fortunately, modern chatbots can read, process, and categorise those responses in minutes. How they do this varies from chatbot to chatbot, but so does the way humans approach that type of task. As anyone who has ever been involved in doing it knows, settling on a final categorisation system or coding framework typically requires discussion. You can have that discussion with your chatbot of choice too.

For example, I first did this using Copilot (the chatbot from Microsoft). In its first pass Copilot created two categories that seemed to me to be means to ends — technology or policy — whereas my interest in that question was the end result graduates were hoping for in 2040 rather than the means of getting there. I explained this to Copilot — pretty similarly to the way I just did — which then redid the categories based on that feedback without the sighs or eye rolls you might get from a colleague you asked to repeat a task they had just completed. That time I was happy with the resulting categories.

From there I asked Copilot to classify each of the 2172 responses based on whether or not they were characteristic of each of the six themes we came up with together. A quick scan indicated it had done a creditable job, but as I said previously, the gold standard for this type of analysis is to have three human coders, so I applied the same logic to chatbots and repeated this step with ChatGPT (OpenAI’s chatbot) and Claude (Anthropic’s chatbot).

Overall, all three agreed on the coding for 87% of the 2172 responses across the six themes. For context, that would be considered a very high level of agreement for human coders. The greatest three-way agreement across any theme was 96% for responses that related to environmental sustainability and the least three-way agreement across any theme was 80% for a theme about social equality. Then again, humans have been known to disagree about that topic too, and the chatbots have been trained based on data created by us. For the final coding, I attributed a response to a theme if at least two out of three of the chatbots thought I should.
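For readers who want to run the same checks on their own data, the agreement figures and the two-out-of-three rule can be computed in a few lines once each chatbot's codes sit side by side. What follows is a minimal Python sketch, not the workflow actually used for this analysis: the theme names, the sample codes, and the function names are all hypothetical, and it assumes agreement is counted per response-and-theme decision, which is one plausible reading of the percentages above.

```python
# Hypothetical yes/no codes from three chatbots: three responses, two themes.
# (The real analysis had 2172 responses and six themes; these values are made up.)
copilot = [
    {"equality": True, "environment": False},
    {"equality": False, "environment": True},
    {"equality": True, "environment": True},
]
chatgpt = [
    {"equality": True, "environment": False},
    {"equality": True, "environment": True},
    {"equality": True, "environment": True},
]
claude = [
    {"equality": True, "environment": False},
    {"equality": False, "environment": True},
    {"equality": False, "environment": True},
]


def agreement_by_theme(*coders):
    """For each theme, the share of responses on which every coder gave the same code."""
    themes = coders[0][0].keys()
    n = len(coders[0])
    return {
        theme: sum(len({coder[i][theme] for coder in coders}) == 1 for i in range(n)) / n
        for theme in themes
    }


def overall_agreement(*coders):
    """Share of all response-and-theme decisions on which every coder agreed.

    Every theme covers the same responses, so this equals the mean of the
    per-theme agreement rates.
    """
    per_theme = agreement_by_theme(*coders)
    return sum(per_theme.values()) / len(per_theme)


def majority_vote(*coders, threshold=2):
    """Final codes: a theme applies to a response if at least `threshold` coders said so."""
    n = len(coders[0])
    return [
        {
            theme: sum(coder[i][theme] for coder in coders) >= threshold
            for theme in coders[0][i]
        }
        for i in range(n)
    ]


per_theme = agreement_by_theme(copilot, chatgpt, claude)
overall = overall_agreement(copilot, chatgpt, claude)
final_codes = majority_vote(copilot, chatgpt, claude)

print(per_theme)
print(round(overall, 2))
print(final_codes)
```

Raw percentage agreement is the simplest measure and matches the figures reported here. A chance-corrected statistic could be swapped in if you need one, but that goes beyond what was done for this analysis.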

So what did this tell me about what VUW graduates hope will happen in New Zealand by 2040?

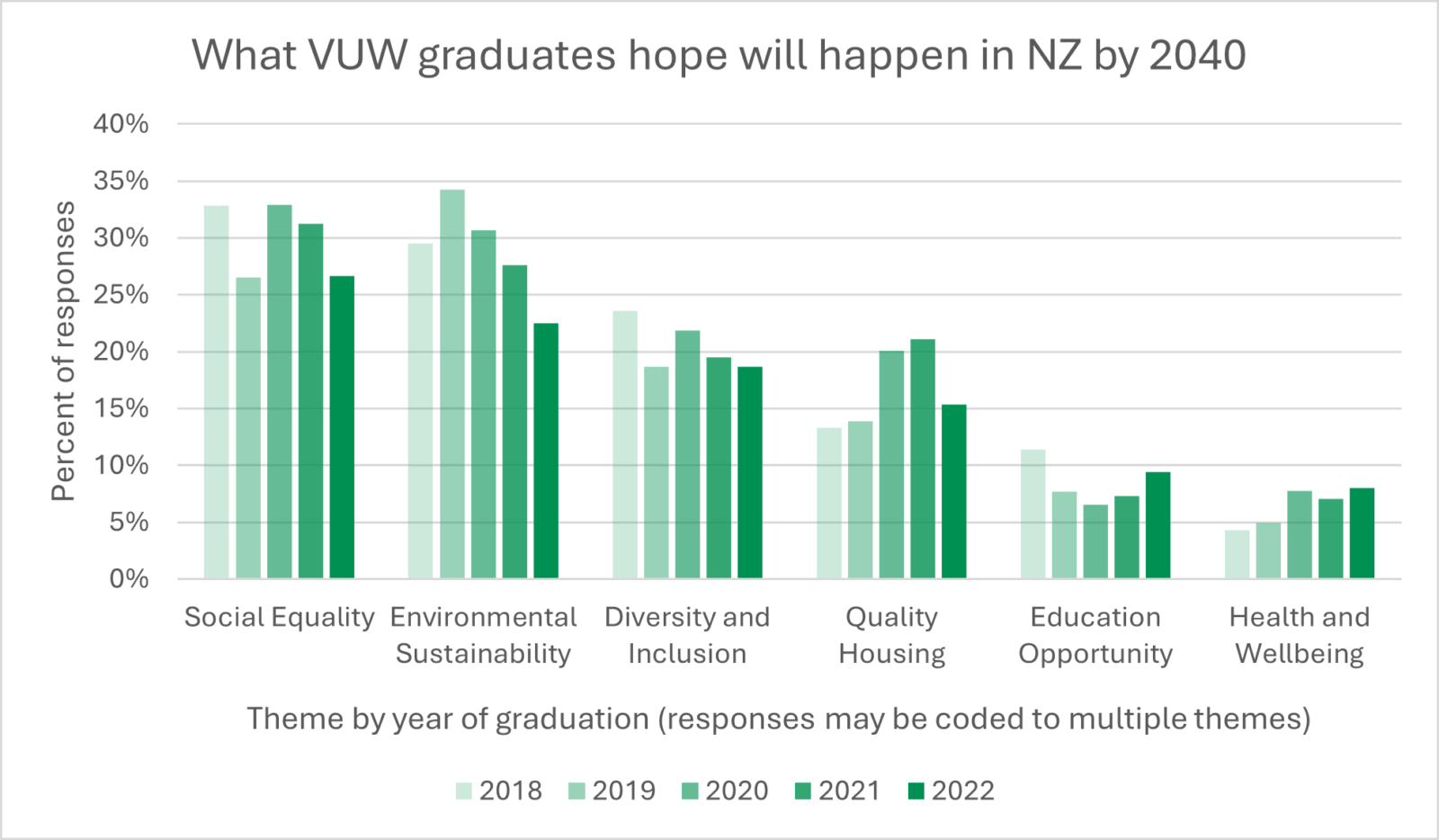

Over the years, responses most often touched on hopes for greater social equality (30% across all years) and environmental sustainability (29% across all years). As the figure above shows, however, graduate hopes changed to some degree over the years the survey was conducted. Responses citing hopes related to environmental sustainability peaked among 2019 graduates and dropped fairly precipitously after that. Housing-related hopes for New Zealand in 2040 peaked in 2020 and 2021.

Being able to code qualitative data so quickly opens up possibilities for deriving much greater value from that data. It makes it possible to summarise things such as the themes above without introduction of conscious or unconscious bias on the part of the analyst. It makes it possible to integrate the qualitative data with quantitative data, such as graduation years in the example above. It also makes it possible to filter responses to easily find those that match particular characteristics, for example to understand people’s aspirations for social equality in New Zealand in their own words or to see what graduates from 2020 and 2021 were saying about their hopes for the country’s housing stock.
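Once every response carries its final theme codes, combining them with a quantitative field and filtering by theme are both short operations. The Python sketch below illustrates the idea under stated assumptions: the record layout, the field names (`year`, `text`, `themes`), the placeholder texts, and both function names are invented for illustration and are not the survey's actual data or tooling.

```python
from collections import defaultdict

# Hypothetical coded records: graduation year, response text, and final theme codes.
# The texts are placeholders, not real survey answers.
records = [
    {"year": 2019, "text": "placeholder response A", "themes": {"environment"}},
    {"year": 2019, "text": "placeholder response B", "themes": {"environment", "equality"}},
    {"year": 2020, "text": "placeholder response C", "themes": {"housing"}},
    {"year": 2020, "text": "placeholder response D", "themes": {"equality"}},
    {"year": 2021, "text": "placeholder response E", "themes": {"housing", "environment"}},
]


def theme_share_by_year(records, theme):
    """Share of each graduation year's responses that were coded to `theme`."""
    counts = defaultdict(lambda: [0, 0])  # year -> [coded to theme, total responses]
    for record in records:
        counts[record["year"]][1] += 1
        if theme in record["themes"]:
            counts[record["year"]][0] += 1
    return {year: tagged / total for year, (tagged, total) in sorted(counts.items())}


def filter_responses(records, theme, years=None):
    """Texts of responses coded to `theme`, optionally limited to certain graduation years."""
    return [
        record["text"]
        for record in records
        if theme in record["themes"] and (years is None or record["year"] in years)
    ]


housing_share = theme_share_by_year(records, "housing")
housing_quotes = filter_responses(records, "housing", years={2020, 2021})

print(housing_share)
print(housing_quotes)
```

The first function gives the kind of year-by-year breakdown plotted in the figure, and the second pulls out the verbatim responses behind any one theme so they can be read in people's own words.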

Want to implement your own qualitative data analysis with the chatbot of your choice? Register for Mary-Ellen’s new half-day workshop, AI-driven Qualitative Data Analysis. Delivered as a practical workshop, you’ll learn a step-by-step method for qualitative data analysis through a series of guided exercises.
